As our organization grows and the 10x SuperCore™ platform becomes more complex to build and manage, it becomes increasingly important to take the time to pulse check our processes and ensure they are still useful at each phase of our development.

In recent weeks, we spent some time thinking about how we could scale up our API Design Review Process to handle the increase in feature development. In this post, we share the insights we gained while improving this process and striving to keep the same level of feedback quality, consistency and lead time.

How we used to review our APIs

The API Design Review is like a code review but for an API specification. During the review, developers other than the API Designers inspect the specification to find any errors and improve the overall quality.

While the main goal of the review is to find defects in the design [1], there are other advantages, such as increased team awareness and knowledge transfer, improved consistency and usability, and insights into alternative solutions to challenging problems [2].

To get all these benefits, each feature team responsible for designing their own internal APIs would request reviews from our centralized API Gateway team. Once an internal API is approved, an implementation exposes and publishes it as a public REST API while abstracting complexities from our clients. Our earlier process had the designers submit these specifications to the API Gateway team through a collaboration tool (Swaggerhub) which allowed any contributor to leave comments and recommendations.

It is important to note that this process was mostly asynchronous, because the reviews happened in the absence of the feature team designing the API. The process also involved only the designers and the API Gateway team, which was becoming a bottleneck for reviews and approvals. Over time, we came to see that the process was not without issues: the increase in API development brought a need for more collaboration, clearer communication and better knowledge sharing.

Taking the pulse of our review process

However mature a process is, it helps to have a way to collect feedback and use it to decide on improvements. In our case, we ran a survey that asked for quantitative and qualitative feedback from API Guild participants and other stakeholders usually involved in the design review sessions. The questions were:

- What’s your role in 10x?

- Have you participated in an API design review session with the API Gateway Team?

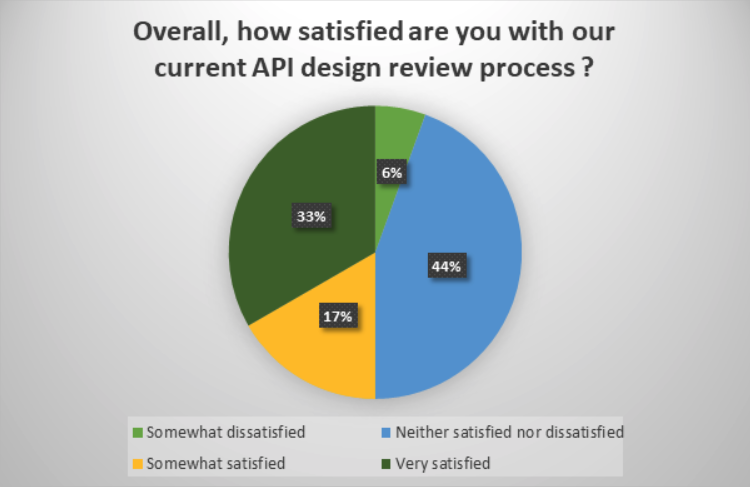

- Overall, how satisfied are you with our current API design review process?

- What is the reason behind your overall satisfaction level?

- How satisfied are you with the level of collaboration across teams in our current API design review process?

- What is the reason behind your answer to question 5?

- How satisfied are you with the level of API knowledge sharing and learning in our current API design review process?

- What is the reason behind your answer to question 7?

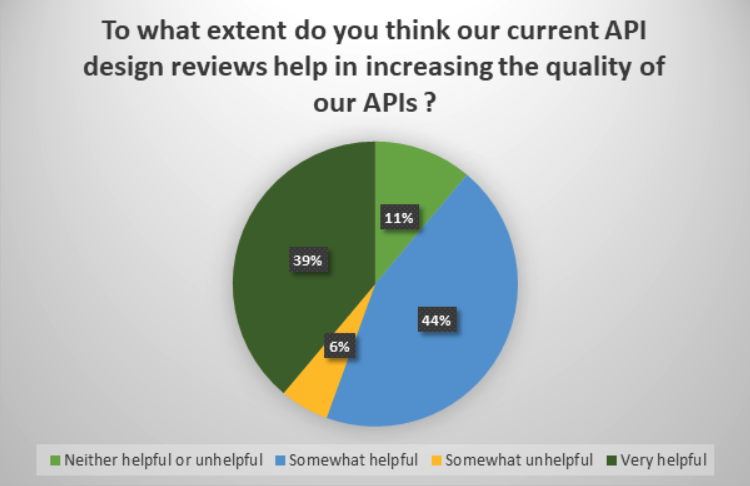

- To what extent do you think our current API design reviews help in increasing the quality of our APIs?

- Please provide any other comments or suggestions on any aspect of our API design review process.

Quantitative Results

The majority of our active API Design community is made up of Software Development Engineers, who submitted 50% of the answers to the questions above. The other answers came from Product Owners, Software Development Managers and Technical Writers.

While most of the people surveyed said they were very satisfied or somewhat satisfied with the current review process, a similar proportion said they were somewhat dissatisfied or neutral.

Qualitative Feedback

Collaboration

The key themes in the answers on this topic showed a good level of satisfaction with how we collaborate and facilitate the question/resolution process. However, they also revealed that key players, such as representatives from Security or some Principal Engineers, weren't involved at the right times during the review lifecycle.

“As the end consumer / user of the APIs in question, I feel like we should potentially be engaged earlier in the process.”

The hard dependency on specific people who brought their knowledge and resources to the sessions, combined with the need for better documentation, meant that the process could also get confusing at times.

“I think we need to democratise it and break the hard dependency on particular people, their knowledge and teams.”

Quality improvements and knowledge sharing

When it comes to learning and knowledge sharing, we're lucky to have talented people whose contributions have not gone unnoticed. Our API standards are well documented and available as a reference for anyone. However, we could improve the way we share our learnings from the API Design sessions with people who did not participate.

With these sessions we’ve managed to increase our confidence in the standards we have for the quality and delivery of our APIs. However, there were still some places where these standards had not yet been applied.

Reflections on survey results

Our main goal during this improvement phase was to increase collaboration and stakeholder satisfaction while also becoming better at sharing the lessons we took from our sessions. So, after this survey we concluded that an asynchronous process where reviewers would leave written comments on an API specification was less efficient and ended up becoming challenging because the API Designer was not present to give more context around the solution.

A more synchronous review with all stakeholders would help to get issues resolved much faster. We could involve key downstream teams who are interested in using the new design and also the documentation and developer experience teams that are building other internal and partner applications.

Introducing our new Open Review process

The improved API Design Review Process would involve similar roles; however, we would invite more key players at an earlier phase.

- API Designers - the feature teams designing and building a new API

- API Reviewers - API Gateway team, technical writers, API consumers

- Moderator - the person who manages, facilitates and promotes the session

Review Process

- API Designers share a new specification in Swaggerhub

- A ticket containing all the information about the context of the review is created and shared on a dedicated internal channel (like the approach at Slack [4]).

- API Reviewers can leave their comments and questions in the ticket at this time

- The Moderator schedules a new Review session where the goal is to go through the context, API descriptions and other elements like paths, methods, request/response schemas, etc. Any required changes can be addressed during or after the meeting, depending on the scope

- Once an API is accepted, it is published as a read-only contract document

- The Moderator shares learning notes from the API Review and suggests updates to the API standards and to our automated linting rules.

Using the new process, we have managed to enrich our review discussions and create a more engaged forum where we can learn more about API Design. We're now also avoiding any review->update->review loops by having a live session where we experiment with different solutions and clear up any issues on the spot.

This subtle cultural change has created a sense of shared design, as both reviewers and designers can now make changes and explore solutions together. The evolution of our API review from a design defect-finding process to a collaborative session where we explore alternative endpoint descriptions, operations, paths, requests and responses has led to improved quality in our API designs.

Finally, with all the insights we gained from these meetings, we have increased the coverage of our Swaggerhub Custom Validation rules, which serve as our API linting tool and automatically validate our API specifications against our design guidelines. This ensures that the knowledge from our review sessions is shared in an effective way and gives API Designers fast feedback through error and warning messages.
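To illustrate the idea of turning review learnings into automated checks, here is a minimal sketch in Python. It is not the Swaggerhub Custom Validation rule format and not our actual rule set. The function name `lint_spec`, the two guidelines it checks (kebab-case path segments and a description on every operation) and the sample paths are all assumptions made for this example.

```python
import re
from typing import Any

# HTTP methods that can appear as operation keys under an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}

# Hypothetical guideline: a path segment is either lowercase kebab-case
# or a {parameter} placeholder.
SEGMENT_PATTERN = re.compile(r"^(?:[a-z0-9]+(?:-[a-z0-9]+)*|\{[a-zA-Z][a-zA-Z0-9]*\})$")


def lint_spec(spec: dict[str, Any]) -> list[dict[str, str]]:
    """Return a list of findings for an OpenAPI-style specification dict."""
    findings: list[dict[str, str]] = []
    for path, path_item in spec.get("paths", {}).items():
        # Rule 1 (error): every path segment follows the naming guideline.
        for segment in filter(None, path.split("/")):
            if not SEGMENT_PATTERN.match(segment):
                findings.append({
                    "severity": "error",
                    "location": path,
                    "message": f"path segment '{segment}' is not kebab-case",
                })
        # Rule 2 (warning): every operation carries a description.
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            if not operation.get("description"):
                findings.append({
                    "severity": "warning",
                    "location": f"{method.upper()} {path}",
                    "message": "operation has no description",
                })
    return findings


# Illustrative specification fragment (hypothetical paths).
example_spec = {
    "paths": {
        "/accounts/{accountId}": {"get": {"description": "Fetch an account."}},
        "/Payment_Orders": {"post": {}},
    }
}

for finding in lint_spec(example_spec):
    print(f"{finding['severity']}: {finding['location']}: {finding['message']}")
```

Each finding carries a severity, mirroring the error and warning messages mentioned above, so a new learning from a review session can be added as one more check without changing how results are reported.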

Conclusion

Building and improving a collaborative process is not something that is done overnight. It takes brainstorming, consensus from peers and multiple iterations to get to the right version that works with your current phase of development. The initial feedback on the changes introduced has been positive.

However, the burst in demand for reviews has increased the need for cross-team coordination, and some new ideas for improvement have already been suggested. That’s why we plan to rerun the survey in a few months' time to gather more information about where we could improve next. Until then, we will use the benefits we have gained to deliver better quality APIs and continue building this process as we go along.

References

[1] Fagan, M.E., 1999. Design and code inspections to reduce errors in program development. IBM Systems Journal, 38(2.3), pp.258-287.

[2] Bacchelli, A. and Bird, C., 2013, May. Expectations, outcomes, and challenges of modern code review. In 2013 35th International Conference on Software Engineering (ICSE) (pp. 712-721). IEEE.

[3] Macvean, A., Maly, M. and Daughtry, J., 2016, May. API design reviews at scale. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (pp. 849-858).

[4] How We Design Our APIs at Slack - Slack Engineering. (2021). Slack Engineering. [online] 11 Aug.